Beyond the Code: Our Protection Team’s Commitment to Transparency

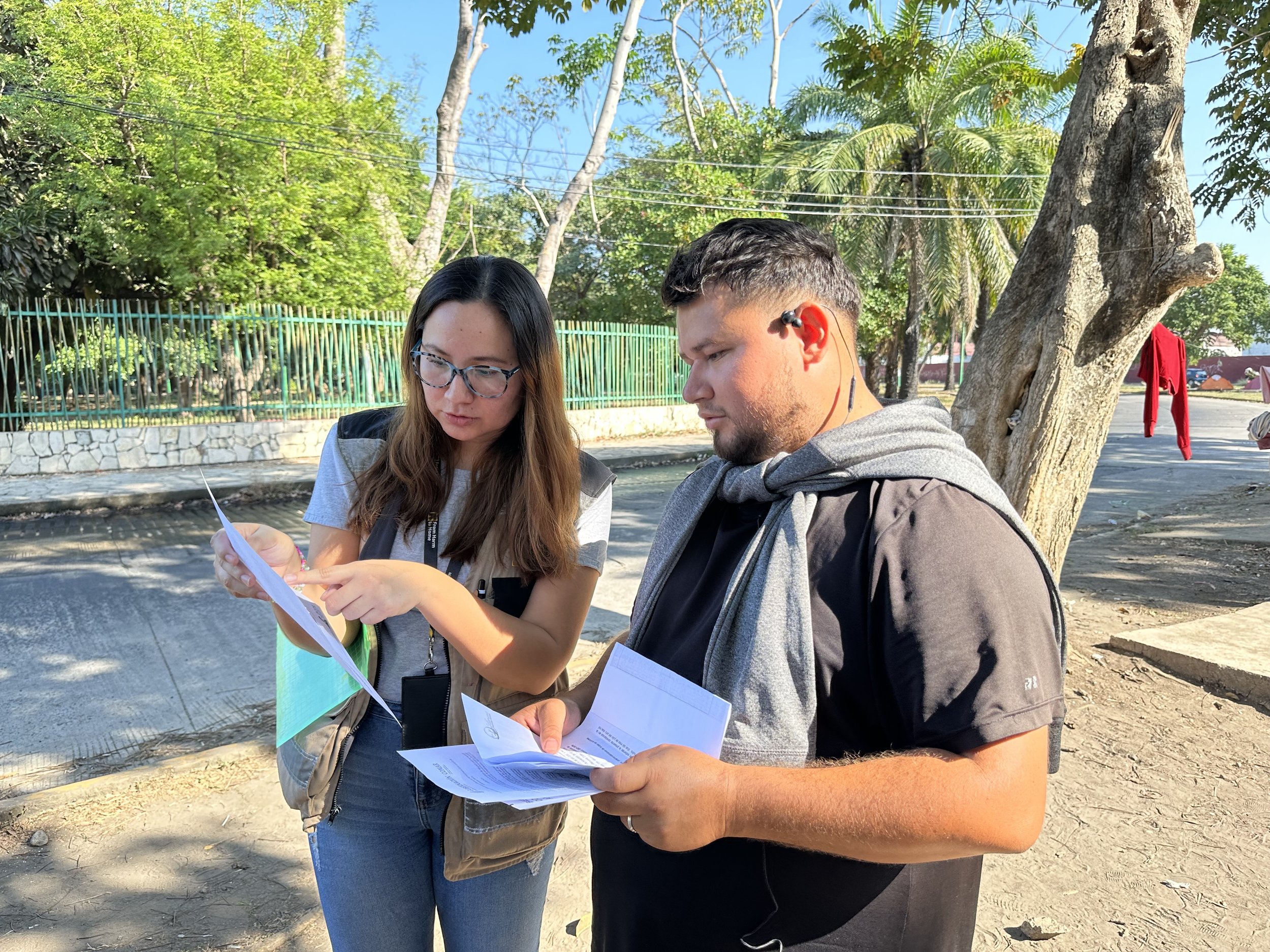

Today, technology is transforming humanitarian aid, but at the heart of it all, there’s something far more powerful than algorithms or code: trust. At Signpost AI, our protection team faces the unique challenge of building that trust every day, especially as we develop AI tools designed to support vulnerable communities. But let’s be honest—AI can feel distant, even intimidating. So, how do we create AI that people can trust? For us, the answer is transparency.

And we’ve found a simple, powerful tool to help make transparency part of our everyday work: weekly diaries. Yes, diaries! These aren’t just logs or updates. They’re the heartbeat of our process, capturing each insight, adjustment, and challenge as we work to make AI a trusted ally for the people we serve. In this post, let’s look inside these diaries, explore what we’re learning, and see where we’re heading. This isn’t transparency on paper; it’s transparency in action, shaping the future of AI in humanitarian work.

Why Weekly Diaries?

Our weekly diaries started as a practical tool to keep track of the AI testing process, but they’ve evolved into much more: a space for reflection, accountability, and continuous learning. They capture the real, often messy process of building a trustworthy AI tool, from testing and refining to dealing with unexpected challenges. They’re a blueprint for building trust, a bridge between technology and humanity.

Every week, as the Greece and Italy Country Pilot Lead and TTA Protection Officer, I record our progress, the team’s feedback, and observations from the moderators we support. These weekly reflections invite everyone involved (moderators, partners, and developers) into the journey with us, and they help us make sure the tools we build stay grounded, relevant, and always improving.

Inside the Weekly Diaries: What Do They Capture?

So, what exactly goes into these diaries? Each week, we follow a simple structure that makes our journey visible and traceable. Here’s a peek at what’s inside:

Weekly Progress: This section captures the step-by-step evolution of our AI tool, from testing sessions to updates on AI responses. It’s a way to track each milestone, each adjustment, and each decision that moves us forward.

Transparent Communication: Building trust isn’t just about making good tools—it’s about open communication. In these entries, I document how we keep our team informed, explaining why certain changes are made and sharing the thinking behind each decision. It’s all about helping our team connect with the “why” of our work.

Quality Assurance Insights: AI tools need rigorous testing to meet our standards, and every entry includes details from our quality checks. We’re evaluating the tool from every angle, testing it with real and synthetic prompts to make sure responses are accurate, sensitive, and safe.

Challenges and Growth Moments: Transparency isn’t just about showing the polished parts; it’s about being real. When moderators tell us something isn’t working, we listen. These entries capture that honesty and help us make room for feedback, balancing structure with flexibility.

This structure helps us track patterns, celebrate the small wins, and make changes that keep our work relevant. It’s transparency at work, in a very real and human way.

What We’re Learning Along the Way

The diaries have taught us valuable lessons about flexibility and open communication. Flexibility, for example, has proven essential in our work with moderators: we keep looking for ways to make their feedback process less time-consuming without reducing its impact.

Transparency itself has also had a striking impact. At first, some of our moderators were understandably cautious, unsure how the AI tool could help them. But as they watched the process unfold and began to understand the tool’s potential, their engagement grew. Some moderators now actively help shape the tool, offering feedback that makes a real difference in how the AI responds. That’s trust-building in action.

Looking Forward: Growing Our Transparency

Our journey with transparency is ongoing, and in the months to come, we’re planning to dive even deeper. We’re refining our diaries, listening to feedback, and exploring new ways to share our insights with others.

One idea we’re excited about? Sharing parts of these diaries with a broader audience. Imagine if we could open up these entries, anonymized of course, to show the world the rigor, the ethics, and the human-centered work behind every AI tool we build. Sharing this process would help us build a stronger connection with the people who will ultimately benefit from these tools.

We’re not doing this alone. Involving partners like Zendesk, who share our commitment to responsible AI, makes this journey even more exciting. Together, we’re creating a community focused on transparency and trust, working toward a future where AI isn’t just smart—it’s a tool you can rely on, a tool that’s grounded in human values.

A Final Thought: AI as a Tool for Good

I’ve seen firsthand that AI can be incredibly powerful, but it’s just a tool. When combined with human insight and ethical oversight, AI has the potential to create positive change in ways we’re only beginning to see. This vision drives everything we do at Signpost.

As we continue this journey, we’re not just building AI—we’re building trust, the kind of trust that opens new doors for AI to make a real, human-centered impact on the world.